Deploying VLM with AidGen

Introduction

Edge deployment of Vision Language Models (VLMs) is the process of compressing, quantizing, and deploying models that normally run in the cloud onto local devices, enabling offline, low-latency multimodal understanding and generation. This chapter uses the AidGen inference engine to demonstrate how to deploy, load, and hold a dialogue with a multimodal large model on an edge device.

In this example, multimodal inference runs entirely on the device. A C++ program calls the relevant AidGen interfaces to receive user input and return the model's responses in real time.

- Device: Rhino Pi-X1

- OS: Ubuntu 22.04

- Model: Qwen2.5-VL-3B (392x392)

Supported Platforms

| Platform | Runtime Environment |
|---|---|
| Rhino Pi-X1 | Ubuntu 22.04, AidLux |

Prerequisites

- Rhino Pi-X1 hardware

- Ubuntu 22.04 or AidLux

Deployment Steps

Step 1: Install AidGen SDK

```bash
# Update the package sources and install the AidGen SDK
sudo aid-pkg update
sudo aid-pkg -i aidgen-sdk

# Copy the example code to the working directory
cd /home/aidlux
cp -r /usr/local/share/aidgen/examples/cpp/aidmlm ./
```

Step 2: Acquire the Model

Because Qwen2.5-VL-3B (392x392) is currently in the Model Farm preview section, it must be downloaded with the `mms` command.

```bash
# Log in
mms login

# Search for the model
mms list Qwen2.5-VL-3B

# Download the model
mms get -m Qwen2.5-VL-3B-Instruct_392x392_ -p w4a16 -c qcs8550 -b qnn2.36 -d /home/aidlux/aidmlm/qwen2.5-vl-3b-392

# Extract the archive and move the model files into the working directory
cd /home/aidlux/aidmlm/qwen2.5-vl-3b-392
unzip qnn236_qcs8550_cl2048
mv qnn236_qcs8550_cl2048/* /home/aidlux/aidmlm/
```

Step 3: Create Configuration File

```bash
cd /home/aidlux/aidmlm
vim config3b_392.json
```

Enter the following JSON configuration and save the file:

```json
{
  "vision_model_path": "veg.serialized.bin.aidem",
  "pos_embed_cos_path": "position_ids_cos.raw",
  "pos_embed_sin_path": "position_ids_sin.raw",
  "vocab_embed_path": "embedding_weights_151936x2048.raw",
  "window_attention_mask_path": "window_attention_mask.raw",
  "full_attention_mask_path": "full_attention_mask.raw",
  "llm_path_list": [
    "qwen2p5-vl-3b-qnn231-qcs8550-cl2048_1_of_6.serialized.bin.aidem",
    "qwen2p5-vl-3b-qnn231-qcs8550-cl2048_2_of_6.serialized.bin.aidem",
    "qwen2p5-vl-3b-qnn231-qcs8550-cl2048_3_of_6.serialized.bin.aidem",
    "qwen2p5-vl-3b-qnn231-qcs8550-cl2048_4_of_6.serialized.bin.aidem",
    "qwen2p5-vl-3b-qnn231-qcs8550-cl2048_5_of_6.serialized.bin.aidem",
    "qwen2p5-vl-3b-qnn231-qcs8550-cl2048_6_of_6.serialized.bin.aidem"
  ]
}
```

The file layout is as follows:

```
/home/aidlux/aidmlm
├── CMakeLists.txt
├── test_qwen25vl_abort.cpp
├── test_qwen25vl.cpp
├── demo.jpg
├── embedding_weights_151936x2048.raw
├── full_attention_mask.raw
├── position_ids_cos.raw
├── position_ids_sin.raw
├── qwen2p5-vl-3b-qnn231-qcs8550-cl2048_1_of_6.serialized.bin.aidem
├── qwen2p5-vl-3b-qnn231-qcs8550-cl2048_2_of_6.serialized.bin.aidem
├── qwen2p5-vl-3b-qnn231-qcs8550-cl2048_3_of_6.serialized.bin.aidem
├── qwen2p5-vl-3b-qnn231-qcs8550-cl2048_4_of_6.serialized.bin.aidem
├── qwen2p5-vl-3b-qnn231-qcs8550-cl2048_5_of_6.serialized.bin.aidem
├── qwen2p5-vl-3b-qnn231-qcs8550-cl2048_6_of_6.serialized.bin.aidem
├── veg.serialized.bin.aidem
└── window_attention_mask.raw
```

Step 4: Compile and Run

```bash
# Install build dependencies
sudo apt update
sudo apt-get install libfmt-dev nlohmann-json3-dev

# Build the example (from /home/aidlux/aidmlm)
mkdir build && cd build
cmake .. && make
mv test_qwen25vl /home/aidlux/aidmlm/

# Run test_qwen25vl after a successful build
cd /home/aidlux/aidmlm/
./test_qwen25vl "qwen25vl3b392" "config3b_392.json" "demo.jpg" "Please describe the scene in the picture"
```

test_qwen25vl.cpp defines a model_type for each supported model, which is passed as the first argument of the executable. The remaining arguments are the configuration file, the input image, and the prompt. Currently supported model_type values are:

| Model | model_type |
|---|---|
| Qwen2.5-VL-3B (392x392) | qwen25vl3b392 |
| Qwen2.5-VL-3B (672x672) | qwen25vl3b672 |
| Qwen2.5-VL-7B (392x392) | qwen25vl7b392 |
| Qwen2.5-VL-7B (672x672) | qwen25vl7b672 |

💡 **Note**

If you use a different model, you must pass the model_type that matches it. For example, if you download the Qwen2.5-VL-7B (672x672) model with the command `aidllm pull api aplux/Qwen2.5-VL-7B-672x672-8550`, use model_type = "qwen25vl7b672".

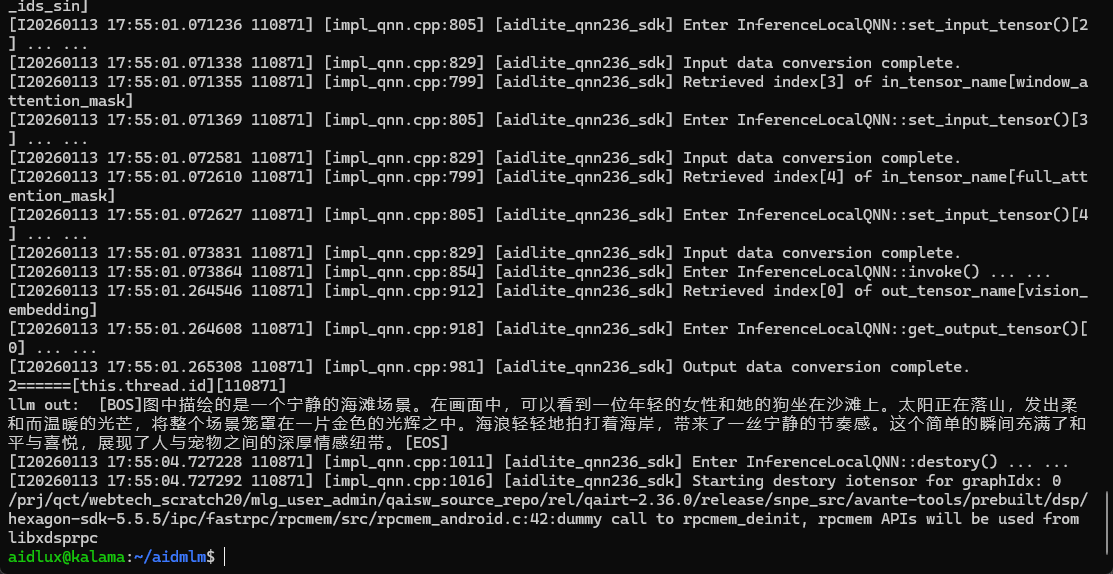

- The running result is shown below: