AidGen SDK Development Documentation

Introduction

AidGen is an inference framework designed specifically for generative Transformer models and built on top of AidLite. It aims to make full use of the hardware's computing units (CPU, GPU, NPU) to accelerate large model inference on edge devices.

AidGen is an SDK-level development kit that exposes atomic-level large model inference interfaces, so developers can integrate large model inference into their own applications.

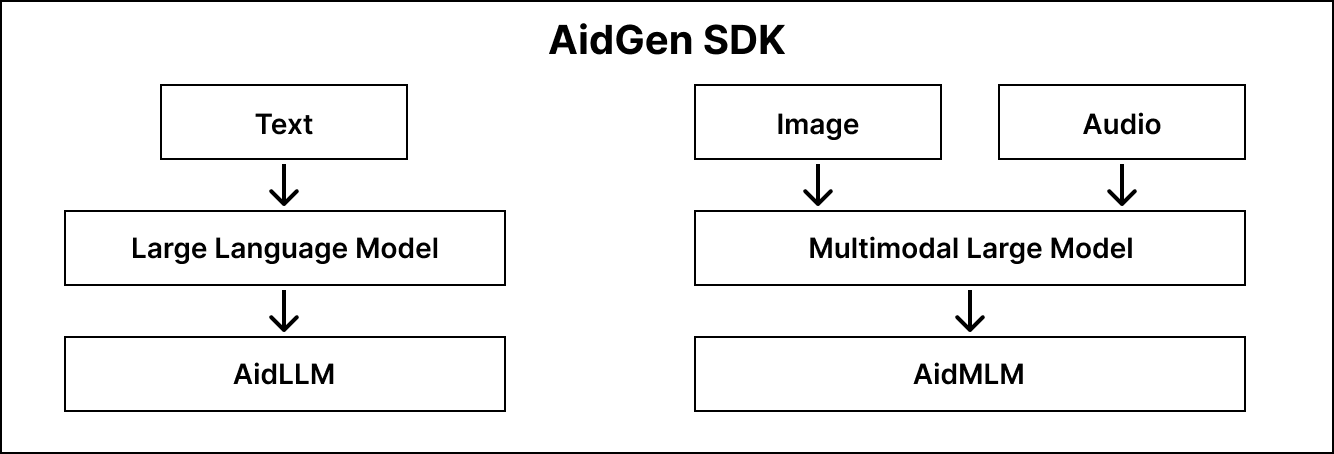

AidGen supports multiple types of generative AI models:

- Large language models -> AidLLM inference

- Multimodal large models -> AidMLM inference

The structure is shown in the diagram below:

💡Note

All large models supported by Model Farm are accelerated on the NPUs of Qualcomm chips through AidGen.

Support Status

Model Type Support Status

| Modality | AidLLM | AidMLM |
|---|---|---|
| Text | ✅ | / |
| Image | / | 🚧 |
| Audio | / | 🚧 |

✅: Supported 🚧: Planned support

Operating System Support Status

| Language | Linux | Android |
|---|---|---|
| C++ | ✅ | / |
| Python | 🚧 | / |
| Java | / | 🚧 |

✅: Supported 🚧: Planned support

Large Language Model AidLLM SDK

Installation

sudo aid-pkg -i aidgen-sdk

Model File Acquisition

Model files and default configuration files can be downloaded directly through the Model Farm Large Model Section.

Example

- Deploying Qwen2.5-0.5B-Instruct on Qualcomm QCS8550

- Deploying Qwen3 Series on Qualcomm QCS8550

- Deploying HY-MT1.5-1.8B on Qualcomm QCS8550

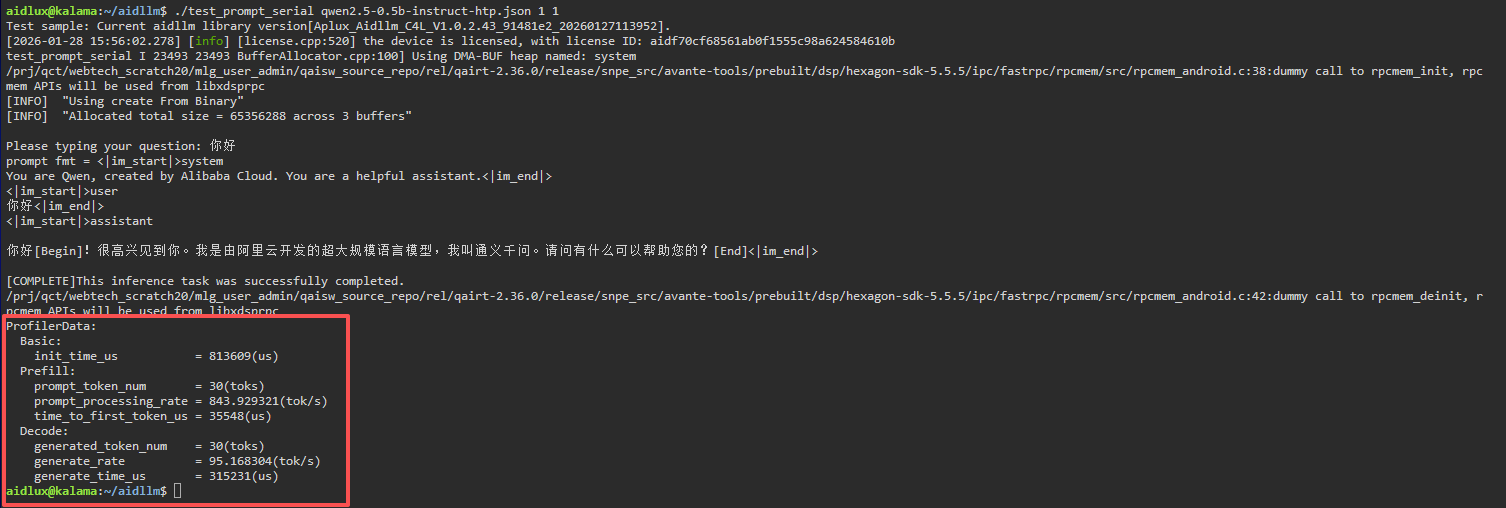

Model Performance Monitoring

💡Note

Please ensure that the sample application can be executed successfully in its entirety.

The following steps use the example "Deploying Qwen2.5-0.5B-Instruct on Qualcomm QCS8550":

# Install dependencies

sudo apt update

sudo apt install libfmt-dev

# Compile

mkdir build && cd build

cmake .. && make

mv test_prompt_serial /home/aidlux/aidllm/

# Run after successful compilation

# The first `1` after the configuration file enables profiler statistics

# The second `1` specifies the number of inference iterations

cd /home/aidlux/aidllm/

./test_prompt_serial qwen2.5-0.5b-instruct-htp.json 1 1

- After you enter the conversation content in the terminal, the log information is printed.
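
Before starting the profiling run above, a short pre-flight check can catch a missing binary or configuration file early. The sketch below is an illustration only: `check_run_inputs` is a hypothetical helper, not part of the AidGen SDK, and the paths in the usage comment are the ones used in the steps above.

```shell
#!/bin/sh
# Sketch only: pre-flight checks before launching the profiling run.
# check_run_inputs is a hypothetical helper, not part of the AidGen SDK.

# Return 0 if the binary is executable and the config file is readable,
# 1 if the binary check fails, 2 if the config check fails.
check_run_inputs() {
    bin="$1"
    config="$2"
    if [ ! -x "$bin" ]; then
        echo "error: $bin is missing or not executable" >&2
        return 1
    fi
    if [ ! -r "$config" ]; then
        echo "error: config file $config is missing or unreadable" >&2
        return 2
    fi
    return 0
}

# Usage (paths and arguments taken from the steps above):
#   cd /home/aidlux/aidllm/ &&
#   check_run_inputs ./test_prompt_serial qwen2.5-0.5b-instruct-htp.json &&
#   ./test_prompt_serial qwen2.5-0.5b-instruct-htp.json 1 1
```

Chaining the check with `&&` means the sample program only starts once both files are in place.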

Multi-modal Vision Model AidMLM SDK

Model Support Status

| Model | Status |
|---|---|
| Qwen2.5-VL-3B-Instruct | ✅ |
| Qwen2.5-VL-7B-Instruct | ✅ |
| InternVL3-2B | 🚧 |
| Qwen3-VL-4B | 🚧 |
| Qwen3-VL-2B | 🚧 |

Installation

sudo aid-pkg update

sudo aid-pkg -i aidgen-sdk

Model File Acquisition

Acquire and download models directly via command line.

sudo aid-pkg -i aidgense

# View supported models

aidllm remote-list api

# Pull a model

aidllm pull api [Url]

# List downloaded models

aidllm list api

# Remove a downloaded model

sudo aidllm rm api [Name]

Example

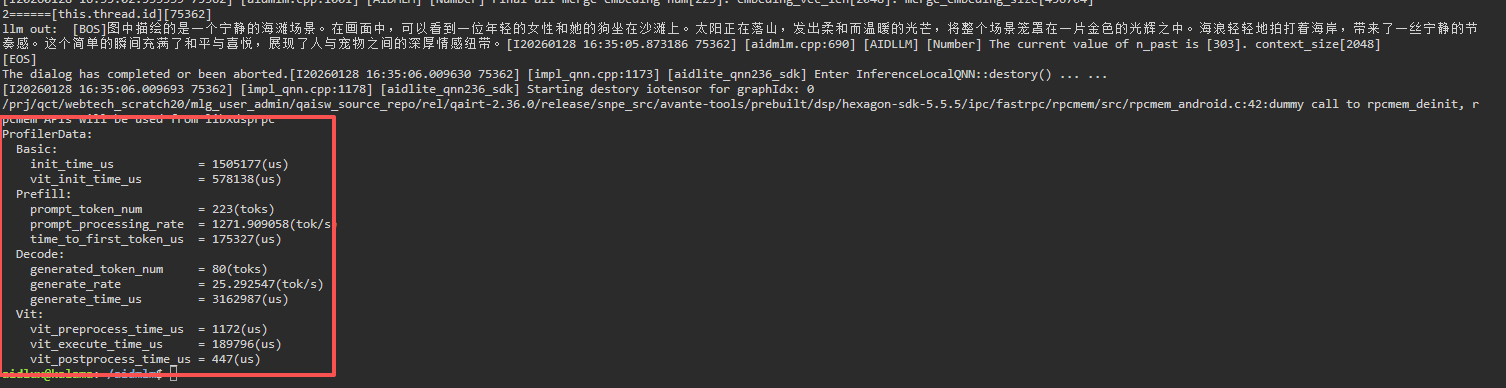

Model Performance Monitoring

💡Note

Please ensure that the sample application can be executed successfully in its entirety.

The following steps use the example "Deploying Qwen2.5-VL-3B-Instruct (392x392) on Qualcomm QCS8550":

sudo apt update

sudo apt-get install libfmt-dev nlohmann-json3-dev

mkdir build && cd build

cmake .. && make

# Run after successful compilation

# The final argument `1` enables profiler statistics

mv test_qwen25vl /home/aidlux/aidmlm/

cd /home/aidlux/aidmlm/

./test_qwen25vl "qwen25vl3b392" "config3b_392.json" "demo.jpg" "Please describe the scene in the picture" 1

- After you enter the conversation content in the terminal, the log information is printed.
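
Because the prompt passed to `test_qwen25vl` contains spaces, it has to stay quoted so that it reaches the program as a single argument. The following sketch wraps the invocation above in a small shell function. `run_mlm_sample` and the `MLM_BIN` variable are hypothetical names introduced here for illustration, and the exit codes 64 and 66 simply follow the BSD `sysexits` convention.

```shell
#!/bin/sh
# Sketch only: wrap the AidMLM sample invocation so each argument stays intact.
# run_mlm_sample and MLM_BIN are hypothetical names used for illustration.

MLM_BIN="${MLM_BIN:-./test_qwen25vl}"

# Arguments: model name, config file, image file, prompt text.
# The trailing 1 mirrors the command above, where it is passed to enable
# profiler statistics.
run_mlm_sample() {
    if [ "$#" -ne 4 ]; then
        echo "usage: run_mlm_sample MODEL CONFIG IMAGE PROMPT" >&2
        return 64
    fi
    if [ ! -r "$2" ] || [ ! -r "$3" ]; then
        echo "error: config or image file is missing or unreadable" >&2
        return 66
    fi
    # Quoting "$4" keeps a prompt containing spaces as one argument.
    "$MLM_BIN" "$1" "$2" "$3" "$4" 1
}

# Usage (values taken from the command above):
#   cd /home/aidlux/aidmlm/ &&
#   run_mlm_sample "qwen25vl3b392" "config3b_392.json" "demo.jpg" \
#       "Please describe the scene in the picture"
```

Checking the config and image files first avoids starting the sample program with inputs that cannot be read.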