Deploying an LLM with AidGen

Introduction

Running a Large Language Model (LLM) on an edge device means taking a model that normally runs in the cloud, compressing and quantizing it, and deploying it locally so that natural language understanding and generation work offline with low latency. This chapter shows how to deploy and load a large language model on an edge device with the AidGen inference engine, and how to hold a dialogue with it.

In this example, inference runs entirely on the device. C++ code calls the relevant AidGen interfaces to read user input and return dialogue results in real time.

- Device: Rhino Pi-X1

- System: Ubuntu 22.04

- Model: Qwen2.5-0.5B-Instruct

Supported Platforms

| Platform | Running Method |
|---|---|
| Rhino Pi-X1 | Ubuntu 22.04, AidLux |

Preparation

- Rhino Pi-X1 hardware

- Ubuntu 22.04 system or AidLux system

- Prepare model files

Visit Model Farm: Qwen2.5-0.5B-Instruct to download the model resource files.

💡Note

When downloading, select the QCS8550 chip.

Case Deployment

Step 1: Install AidGen SDK

# Install AidGen SDK

sudo aid-pkg update

sudo aid-pkg -i aidgen-sdk

# Copy test code

cd /home/aidlux

cp -r /usr/local/share/aidgen/examples/cpp/aidllm .

Step 2: Upload & Unzip Model Resources

- Upload the downloaded model resources to the edge device.

- Unzip the model resources to the /home/aidlux/aidllm directory:

cd /home/aidlux/aidllm

unzip Qwen2.5-0.5B-Instruct_Qualcomm\ QCS8550_QNN2.29_W4A16.zip -d .

Step 3: Confirm Resource Files

After unzipping, the directory should contain the following files:

/home/aidlux/aidllm

├── CMakeLists.txt

├── test_prompt_abort.cpp

├── test_prompt_serial.cpp

├── aidgen_chat_template.txt

├── chat.txt

├── htp_backend_ext_config.json

├── qwen2.5-0.5b-instruct-htp.json

├── qwen2.5-0.5b-instruct-tokenizer.json

├── qwen2.5-0.5b-instruct_qnn229_qcs8550_4096_1_of_2.serialized.bin

├── qwen2.5-0.5b-instruct_qnn229_qcs8550_4096_2_of_2.serialized.bin

Step 4: Set Dialogue Template

💡Note

For the dialogue template, refer to the aidgen_chat_template.txt file in the model resource package.
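A dialogue template wraps each user message in the control tokens the model was trained on. The sketch below illustrates the idea with the Qwen2 template used in this step, assuming that `{0}` marks the position where the user's input is substituted. The helper `fill_template` is hypothetical and written with the standard library only; the sample code may perform this substitution differently (the libfmt dependency installed in Step 5 suggests fmt-style formatting, but that is an assumption).

```cpp
#include <string>

// Qwen2 chat template from this step; "{0}" is assumed to mark the user message.
const std::string kQwen2Template =
    "<|im_start|>system\nYou are Qwen, created by Alibaba Cloud. "
    "You are a helpful assistant.<|im_end|>\n"
    "<|im_start|>user\n{0}<|im_end|>\n"
    "<|im_start|>assistant\n";

// Hypothetical helper (not part of the AidGen SDK):
// returns a copy of tmpl with every "{0}" replaced by user_input.
std::string fill_template(const std::string& tmpl, const std::string& user_input) {
    const std::string placeholder = "{0}";
    std::string out;
    std::size_t pos = 0;
    while (true) {
        const std::size_t hit = tmpl.find(placeholder, pos);
        if (hit == std::string::npos) {
            // No further placeholder: copy the rest of the template and stop.
            out.append(tmpl, pos, std::string::npos);
            break;
        }
        out.append(tmpl, pos, hit - pos);  // text before the placeholder
        out += user_input;                 // substituted user message
        pos = hit + placeholder.size();
    }
    return out;
}
```

Calling `fill_template(kQwen2Template, "Hello")` yields a prompt in which the user turn reads `<|im_start|>user\nHello<|im_end|>`, followed by an open assistant turn for the model to complete.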

Modify the test_prompt_serial.cpp file according to the large model's template:

if(prompt_template_type == "qwen2"){

prompt_template = "<|im_start|>system\nYou are Qwen, created by Alibaba Cloud. You are a helpful assistant.<|im_end|>\n<|im_start|>user\n{0}<|im_end|>\n<|im_start|>assistant\n";

}

Step 5: Compile and Run
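Before building, it can help to confirm that the model resources listed in Step 3 are actually present, since a missing file would otherwise only surface when the model is loaded. The following is a minimal sketch using C++17 `std::filesystem`; the helper `missing_files` and the constant `kModelResources` are hypothetical names introduced for illustration and are not part of the AidGen SDK or the sample code.

```cpp
#include <filesystem>
#include <string>
#include <system_error>
#include <vector>

// Model resource files listed in Step 3.
const std::vector<std::string> kModelResources = {
    "htp_backend_ext_config.json",
    "qwen2.5-0.5b-instruct-htp.json",
    "qwen2.5-0.5b-instruct-tokenizer.json",
    "qwen2.5-0.5b-instruct_qnn229_qcs8550_4096_1_of_2.serialized.bin",
    "qwen2.5-0.5b-instruct_qnn229_qcs8550_4096_2_of_2.serialized.bin",
};

// Hypothetical helper: returns the names in `expected` that are not
// regular files inside `dir`.
std::vector<std::string> missing_files(const std::filesystem::path& dir,
                                       const std::vector<std::string>& expected) {
    std::vector<std::string> missing;
    for (const auto& name : expected) {
        std::error_code ec;  // non-throwing overload: any error counts as missing
        if (!std::filesystem::is_regular_file(dir / name, ec)) {
            missing.push_back(name);
        }
    }
    return missing;
}
```

A check such as `missing_files("/home/aidlux/aidllm", kModelResources)` could be run once at program start, printing any returned names and exiting early if the list is not empty.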

# Install dependencies

sudo apt update

sudo apt install libfmt-dev

# Compile

mkdir build && cd build

cmake .. && make

# Run after successful compilation

# The first parameter `1` enables profiler statistics

# The second parameter `1` specifies the number of inference iterations

mv test_prompt_serial /home/aidlux/aidllm/

cd /home/aidlux/aidllm/

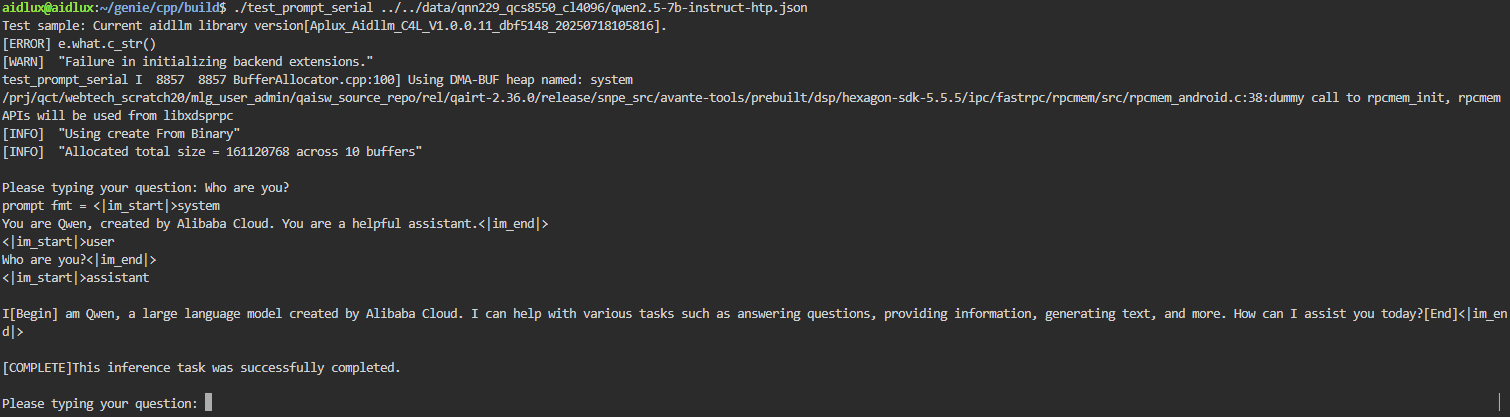

./test_prompt_serial qwen2.5-0.5b-instruct-htp.json 1 1- Enter dialogue content in the terminal.